Dynamics of Real-Time Simulation – Chapter 3 – Dynamics Errors for Fixed-Step Integration Methods

3.1 Introduction

Error analysis of numerical integration algorithms is traditionally based on the residual error terms in the Taylor series expansion representing the integral. Although such error measures do establish the dependence of the error on both the integration step size and the magnitude of the appropriate higher derivative, they do not lend much insight into the estimation of solution errors when applying a given integration algorithm to a specific problem. Indeed, comprehensive error analysis can only be accomplished when the differential equations being integrated are linear, which is seldom the case in most practical applications.

Fortunately, as we have pointed out in Chapter 1, the nonlinear differential equations can often be linearized about some reference or equilibrium solution, at least for purposes of approximate error analysis. If, in addition, the integration step size is taken to be constant (this is invariably the case in real-time simulation of dynamic systems and can be approximately true over a number of integration steps when a variable-step method is used), then the Z-transform theory introduced in Chapter 2 can be applied. This in turn allows analytic formulas to be developed for the errors in quasi-linear system characteristic roots and the gain and phase errors in the quasi-linear system transfer functions. These specific error measures have already been defined in Chapter 1, Eqs. (1.45), (1.47), (1.49), (1.50), (1.53), (1.54) and (1.55). They will depend on the integration step size as well as the particular integration algorithm, and can be directly related in a meaningful manner to the overall solution accuracy in simulating dynamic systems.

In this Chapter the characteristic root and transfer-function gain and phase errors are developed in convenient asymptotic form for a variety of integration methods. For single-pass (or equivalent single-pass) integration algorithms we will show that the characteristic root errors and transfer-function gain and phase errors can easily be written for any order of linear system based on knowledge of the gain and phase errors of the algorithm in performing simple isolated integration. The frequency-domain methodology developed in this chapter not only allows quantitative comparison of the dynamic performance of various integration methods, but it also points the way to developing improved algorithms in specific applications.

3.2 Error Measures for Euler Integration

We now illustrate the methodology for deriving the error measures defined in Chapter 1 by considering the most simple of all integration methods, Euler or rectangular integration, when applied to the solution of the first-order linear system described by the state equation

(3.1) ![]()

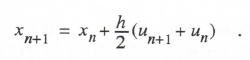

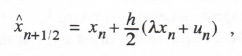

This is the example we considered earlier in Chapter 2 to illustrate the Z-transform method. If we use Euler integration to solve Eq. (3.1) numerically, the following difference equation is employed:

(3.2) ![]()

3-1

where h is the integration step size and xn = x(nh), un = u(nh) Taking the Z transform of Eq. (3.2), we have

(3.3) ![]()

For x0 = 0 (zero initial condition) we obtain the following formula for the digital system Z transform:

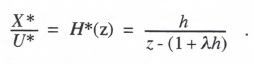

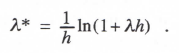

(3.4)

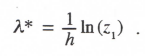

As noted in Eq. (2.22), the pole, z1 = 1 + λh, of H*(z) is related to the equivalent characteristic root, λ*, by the formula

(3.5) ![]()

from which

(3.6)

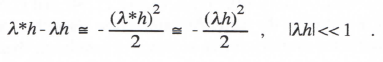

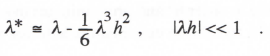

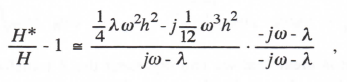

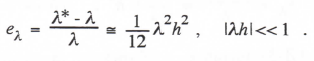

We can derive an asymptotic formula for λ* when |λh| << 1 by letting z = eλ*h = 1 + λ*h + (λ*h)2/2 + ··· in the denominator of Eq. (3.4). Setting the denominator equal to zero and retaining terms up to h2, we obtain

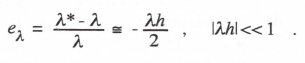

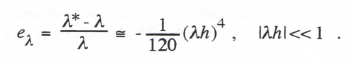

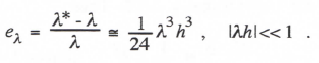

This gives us the following formula for the fractional error in characteristic root:

(3.7)

This is just the result we obtained earlier in Eq. (2.24) by expanding the log function of Eq. (3.6) in a power series. Here we have obtained the asymptotic formula for eλ directly from the denominator of the system Z transform. This is also the method we will use later in this chapter in the case of higher-order integration methods, where it is not possible to derive analytic formulas for the poles of the system Z transform.

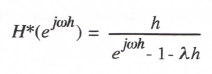

Next we derive the asymptotic formula for the fractional error in the digital system transfer function for sinusoidal inputs. From Eq. (2.39) we have

(3.8)

for the digital system transfer function. From Eq. (2.40) the continuous system transfer function for sinusoidal inputs is

(3.9)

3-2

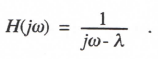

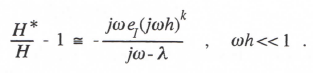

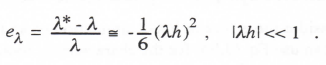

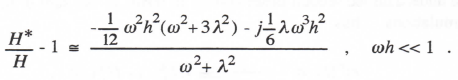

From Eqs. (3.8) and (3.9) we can write the exact formula for H*/H – 1, the fractional error in digital system transfer function. In trigonometric form the formula is given in Eq. (2.41). To derive a simpler asymptotic expression when ωh <<1 , we expand the exponential function in the denominator of Eq. (3.8) in a power series and retain terms of order h2. In this way we obtain

(3.10)

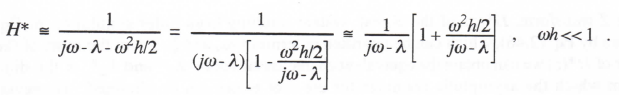

Noting that 1/(jω – λ) = H from Eq. (3.9), we can write the following formula for the fractional error in digital system transfer function:

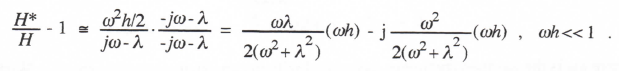

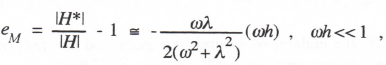

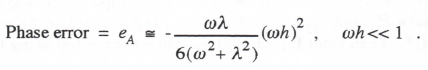

Comparison with Eqs. (1.53) and (1.54) leads directly to the following asymptotic formulas for the transfer function gain and phase errors:

(3.11)

(3.12)

As expected, the transfer function gain and phase errors using Euler integration vary as the first power of the integration step size h.

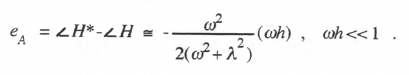

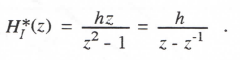

Reference to Eq. (3.1) shows that when λ = 0, the linear first-order system represents a pure integrator. The corresponding Euler integrator transfer function, HI*, can be written directly in asymptotic form by setting λ = 0 in Eq. (3.10). In this way we obtain

(3.13)

From Eq. (3.13) it is evident that Euler integration behaves approximately as an ideal integrator (transfer function =1/jω) with an additional phase lag of ωh/2, which in turn corresponds to a pure time delay of h/2.

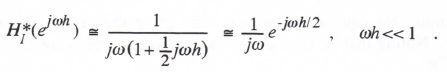

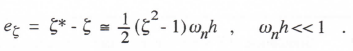

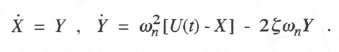

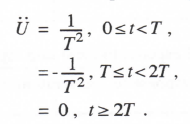

We consider next the case where the characteristic root (eigenvalue) is complex. This will occur in simulating an underdamped second-order linear system, such as the mass-spring-damper system given by Eq. (2.42). In terms of the undamped natural frequency ωn (= √K/M) and the damping ratio ζ(=C/2√KM) Eq. (2.42) can be rewritten as

(3.14)

3.3

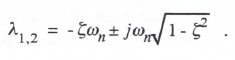

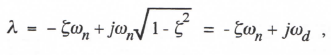

For the underdamped case, ζ < 1, and the characteristic roots λ1 and λ2 are given by

(3.15)

The Z transform, H*(z), of the digital system resulting from Euler simulation of Eq. (3.14), is given by Eq. (2.46), which can be rewritten in terms of ωn and ζ. From the roots of the denominator of H*(z) we can obtain the equivalent characteristic roots, λ1* and λ2*, of the digital system, from which the asymptotic formulas for the root errors can be obtained. However, a simpler procedure is to use Eq. (3.7), as obtained for Euler simulation of the first-order system, but with λ complex. Thus we let λ in Eq. (3.7) be given by

(3.16)

where ωd is the damped frequency associated with the continuous-system root λ. With Eq. (3.16) for λ, Eq. (3.7) becomes

(3.17)

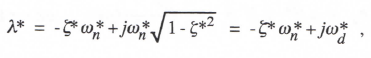

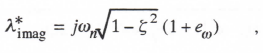

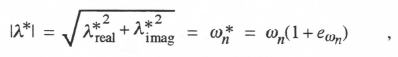

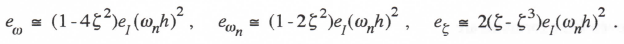

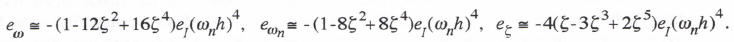

In terms of ωn* and ζ*, the undamped natural frequency and damping ratio, respectively, of the equivalent digital system characteristic root λ*, we can write λ* as

(3.18)

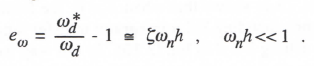

where ωd* is the damped frequency associated with λ*. Comparison of Eq. (3.17) with (3.16) shows that ζωnh in Eq. (3.17) represents eω, the fractional error in the damped frequency of the digital root. Thus for Euler integration

(3.19)

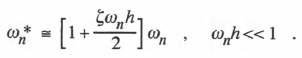

To compute the equivalent damping ratio ζ* of the digital system root, we must first determine ωn*. Noting that |λ*| = ωn*, we can derive the following asymptotic formula for ωn* from Eq. (3.17):

(3.20)

It follows that eωn the fractional error in undamped natural frequency of the digital root as defined

previously in Eq. (1.49), is given for Euler integration by

(3.21)

Equating the real part of λ* in Eq. (3.17) to – ζ*ωn* with ωn* given by Eq. (3.20), we obtain the following asymptotic formula for the damping ratio error when using Euler integration:

3-4

(3.22)

From Eqs. (3.19), (3.21) and (3.22) it is evident that the error parameters associated with complex characteristic roots when using Euler integration all vary as the first power of the integration step size h.

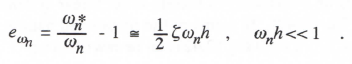

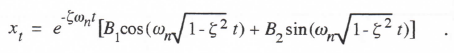

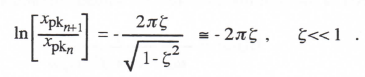

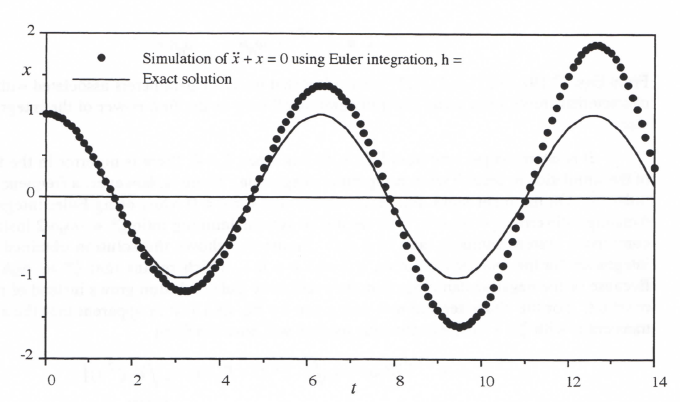

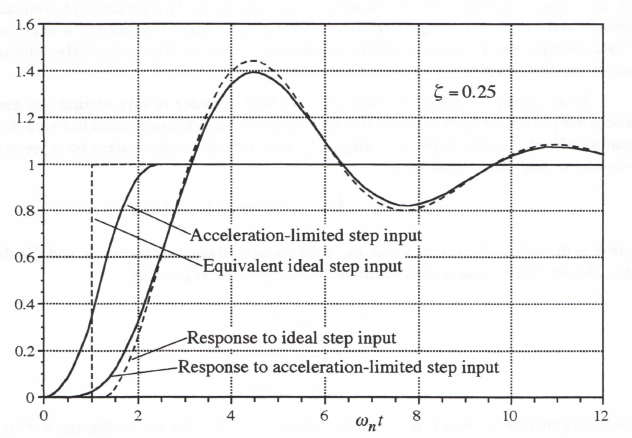

It is interesting to note from Eq. (3.19) that when ζ = 0, there is no error in the frequency of the simulation to order h when using Euler integration. There is, however, a frequency error of order h2. On the other hand, Eq. (3.22) shows that for ζ = 0 when using Euler integration, the damping-ratio error eζ – ωnh/2. Thus the digital system damping ratio ζ* – ωnh/2 instead of the continuous system damping ratio of ζ = 0. Figure 3.1 shows the solution obtained by Euler integration for the specific case of ωn = 1 and h = 0.1, which means that ζ* – ωnh = -0.05. Because of the negative damping ratio, the oscillatory Euler solution grows instead of remaining constant. For the characteristic root pair given by Eq. (3.15) it is apparent that the associated transient x1 with ζ < 1 for the continuous solution will have the form

(3.23)

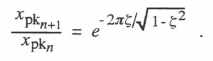

Since the period T of the oscillatory transient is given by 2π/(ωn√1 – ζ2) , it follows that the ratio of successive peaks in the oscillatory transient, as reflected by the change over one cycle in the exponential amplitude factor e-wig, will be given by

(3.24)

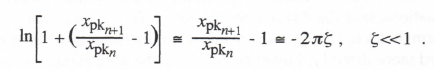

Taking the log of both sides, we obtain

(3.25)

For ζ < < 1 it follows from Eq. (3.25) that xpkn+1 xpkn and therefore | xpkn+1/xpkn-1| << 1.

Under these conditions we can rewrite Eq. (3.25) as

(3.26)

In the Euler simulation in Figure 3.1, we have seen above that ζ* -0.05 for ωnh = 0.1, according to Eq. (3.22). Letting ζ* = ζ = -0.05 in Eq. (3.26), we find that xpkn+1/ xpkn – 1 0.1π = 0.314. Thus we expect a fractional increase of approximately 0.314 each cycle of the oscillatory transient when the damping ratio is -0.05. This is indeed confirmed in the solution shown in the figure. Clearly Euler integration is not a very efficient algorithm for simulating an undamped second-order system.

We next consider the transfer function errors when Euler integration is used to simulate the second-order linear differential equation given by

3-5

Figure 3.1. Undamped second-order system simulation using Euler integration.

1

(3.27) ![]()

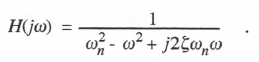

The transfer function of the continuous system for sinusoidal inputs becomes

(3.28)

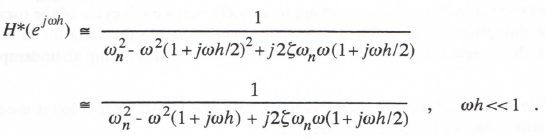

We could, of course, use the same procedure we employed earlier in analyzing the transfer function errors in the case of Euler integration of the first-order linear system, namely, write the difference equations, take the Z transform to obtain H*(z), and then from H*(ejwh) determine the asymptotic formula for the fractional error in digital system transfer function. The error formulas can be obtained more directly, however, by using the asymptotic representation of Eq. (3.13) for the transfer function of the Euler integrator. Thus we replace jω in Eq. (3.28) by jω (1 + jωh/2). In this way we obtain

|

(3.29)

From Eq. (3.29) the following asymptotic formula for the fractional error in transfer function follows directly:

3-6

(3.30)

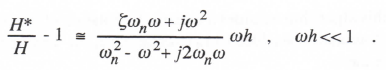

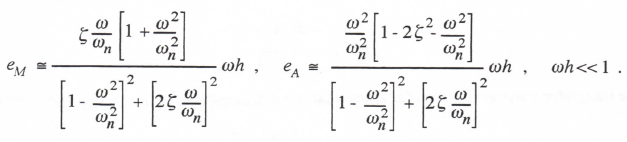

After rationalization to obtain the real and imaginary parts of H*/H – 1, the following formulas are obtained for the fractional error in transfer function gain and the phase error for Euler integration applied to a second-order linear system:

(3.31)

Again we note that the transfer function gain and phase errors for Euler integration, applied here to the simulation of a linear second-order system, are proportional to the first power of the integration step size h.

3.3 Error Measures for Single-pass Integration Algorithms in General

In the previous section we developed characteristic root and sinusoidal transfer function error formulas for Euler integration of first and second-order linear systems. We used procedures in deriving these formulas that can be generalized to any single-pass integration method. By a single-pass method we mean an integration algorithm which requires only one evaluation of each state-variable derivative per overall integration step. Any of the Adams-Bashforth predictor methods, as well as the trapezoidal and other implicit methods introduced in Chapter 1, qualify as single-pass methods. Although the Adams-Moulton predictor-corrector methods of Chapter 1 require two passes per integration step, they still produce asymptotic errors identical to those of the equivalent implicit methods. This is because the local integration truncation error associated with the predictor pass, as described in Section 1.2, is of order k + 1 for a kth-order Adams-Moulton method and will therefore not contribute to the global error of order k for the overall algorithm. On the other hand the Runge-Kutta methods represent multiple-pass algorithms for which the asymptotic errors are not equivalent to those of any single-pass method. This is because the intermediate passes have local truncation errors of order k or less for kth-order Runge-Kutta methods.

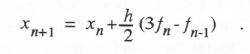

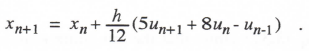

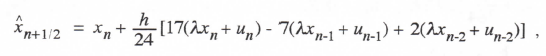

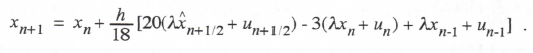

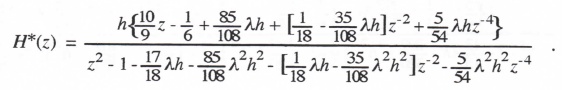

With single-pass algorithms all of the asymptotic formulas for the characteristic-root and transfer-function errors can be written directly once the approximate formulas for each individual integrator sinusoidal transfer function are known. Eq. (3.13) represents this formula in the case of Euler integration. As a next example we consider second-order Adams-Bashforth (AB-2) integration. From Eq. (1.12) the AB-2 difference equation for simple integration of the equation dx/dt = f(t) is given by

(3.32)

3-7

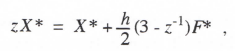

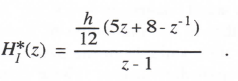

Based on fn and fn-1 this algorithm assumes a linear extrapolation of f(t) from t = nh to (n+1)h. The area under this extrapolation is added to xn to compute xn+1. Taking the Z transform of Eq. (3.32) with x0 = 0, we have

(3.33)

from which the following formula is obtained for the AB-2 integrator Z transform:

(3.34)

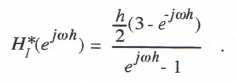

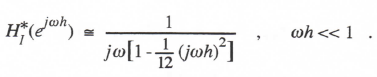

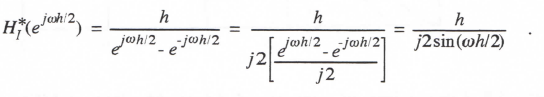

The integrator transfer function for sinusoidal input data sequences is given by HI*(ejωh). Thus

(3.35)

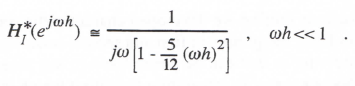

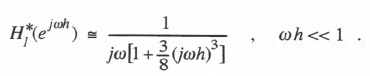

If we represent the exponential terms in Eq. (3.35) by power series and retain terms to order h3, then the following asymptotic formula is obtained for the AB-2 integrator transfer function:

(3.36)

Comparison with the ideal integrator transfer function of 1/jω shows that the AB-2 integrator behaves approximately like an ideal integrator except for a fractional gain error equal to (5/12)(ωh)2. To order h2 the AB-2 integrator phase error is zero.

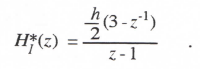

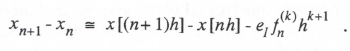

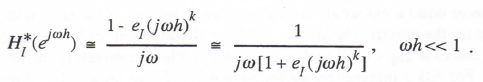

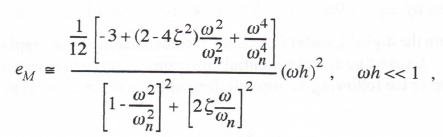

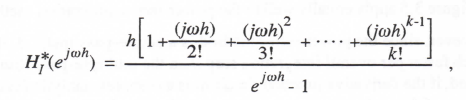

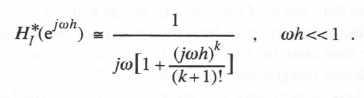

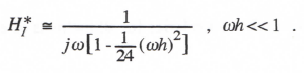

We will now show that for any kth-order numerical integration algorithm the asymptotic formula for the integrator transfer function takes the form

(3.37)

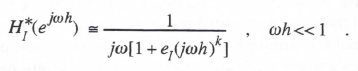

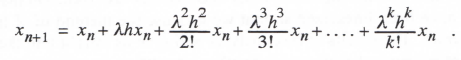

In Section 1.2 we have seen that an appropriate Taylor series expansion for a kth-order integration algorithm can be used to derive the following formula for xn+1 – xn:

(3.38)

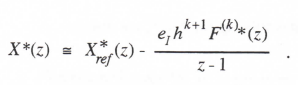

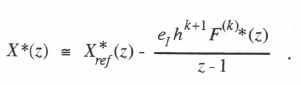

Here x[(n+1)h] and x[nh] represent the exact solution of the continuous integral of f(t) at times (n+1)h and nh, respectively, and the term –eI f hk+1 represents the local truncation error associated with the integration method of order k, where f is the kth time derivative of f at t = nh. For example, Eq. (1.7) in Section 1.2 shows that k = 1 and eI = 1/2 for Euler integration; for trapezoidal integration k = 2 and eI = -1/12, as determined earlier in Eq. (1.10). To develop formulas for the integrator transfer function for sinusoidal inputs we take the Z transform of Eq. (338) and divide by z -1 to obtain

3-8

(3.39)

Here X*ref (z) is the Z transform of the exact solution, x[nh]. Next we consider the case of sinusoidal data sequences by replacing z with ejωh. We also note that F(k)*(ejωh) = (jω)k F* (ejωh), i.e., the Fourier transform of the kth derivative of a function is equal to the Fourier transform of the function multiplied by (jω)k. After dividing the resulting expression by F*, we have

(3.40)

The term X*/F* is simply the sinusoidal transfer function, HI* (ejωh), of the numerical integrator. The term X*ref /F*=1/jω, the transfer function of an ideal integrator. If we now approximate ejωh -1 by jωh, Eq. (3.40) can be rewritten as

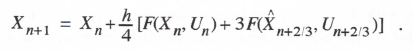

Thus Eq. (3.37) is verified as representative of the general asymptotic form for the transfer function of a numerical integrator of order k. Comparison of Eq. (3.37) with Eq. (3.13) shows that for Euler integration, k = 1and eI = 1/2. Comparison of Eq. (3.37) with (3.36) shows that for AB-2 integration, K = 2 and eI = 5/12 . Table 3.1 on page 3-51 of this chapter presents summary formulas for the integrator transfer function error coefficient eI for predictor, predictor-corrector, power series and implicit integration algorithms up to order K = 4.

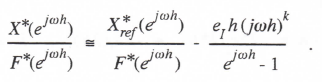

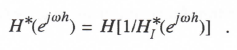

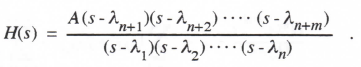

For any single-pass integration algorithm it is straightforward to show that the Z transform of the digital system that results when the algorithm is used to simulate a linear system with transfer function H(s) is given by

(3.41) ![]()

i.e., the argument s in H(s) is simply replaced by 1/HI*(z). This is because HI*(z) is the digital equivalent to 1/s for the continuous system and therefore 1/HI*(z) is equivalent to s. In the same way, the digital system transfer function for sinusoidal inputs for any single pass integration algorithm used to simulate a continuous system with transfer function H(jω) is given by

(3.42)

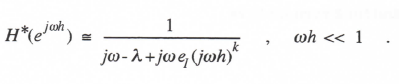

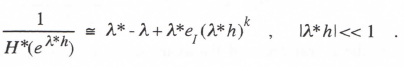

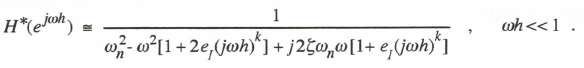

In particular, let H(s) = 1/(s – λ) and H(jω) = 1/(jω – λ). Using Eq. (3.37) to represent HI*, the general asymptotic form of the integrator transfer function, we obtain the following formula for the digital system transfer function for sinusoidal inputs:

(3.43)

3.9

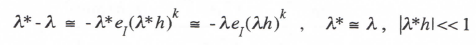

Replacing jω with λ* and solving for 1/H*, we obtain

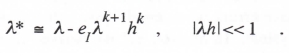

(3.44)

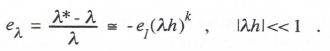

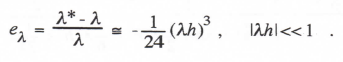

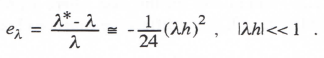

Setting the right side of Eq. (3.44) equal to zero and solving for λ* determines the value of λ* which makes the denominator of H*(eλ*h) vanish, i.e., the equivalent characteristic root of the digital system. Thus

and

(3.45)

Eq. (3.45) represents an asymptotic formula for the fractional error in characteristic root for any single-pass or equivalent single-pass algorithm, where eI is the integrator transfer-function error coefficient for the specific algorithm. We have already seen for Euler integration that k = 1 and eI = 1/2. In this case Eq. (3.48) yields eλ – λh/2, which is precisely the result we derived earlier in Eq. (3.7). For AB-2 integration we found that k = 2 and eI = 5/12. From Eq. (3.48) it follows that – (5/12)(λh)2 is the asymptotic formula for eλ , the fractional error in characteristic root when using the AB-2 algorithm.

It should be noted that Eq. (3.45) is not valid for multiple-pass integration algorithms where the intermediate passes used to obtain the states for the final pass are of order less than k. Thus Eq. (3.48) is not valid for the Runge-Kutta methods. On the other hand it is valid for the two-pass Adams-Moulton algorithms introduced in Chapter 1.

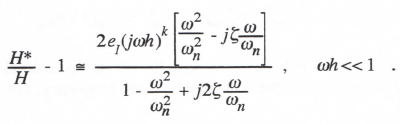

From Eq. (3.43) it is easy to obtain the asymptotic formula for the fractional error in sinusoidal transfer function. If we factor jω – λ from the denominator and note that 1/(jω – λ) = H(jω) , we can obtain the following formula:

(3.46)

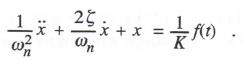

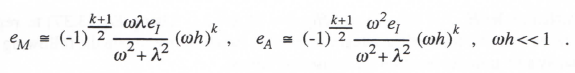

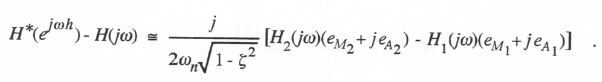

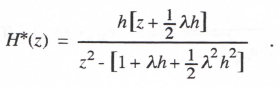

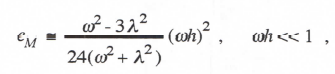

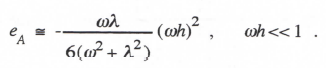

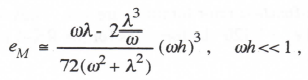

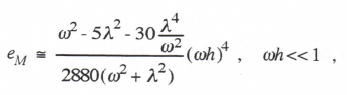

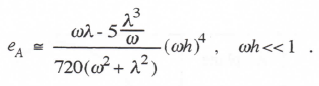

To obtain the separate real and imaginary parts of H*/H-1 we must rationalize the right side of Eq. (3.46). The result will depend on whether the order k of the integration algorithm is odd or even. We obtain the following formulas for eM, the fractional error in transfer-function gain (i.e., the real part of H*/H-1), and eA, the transfer function phase error (i.e., the imaginary part of H*/H-1) for k odd:

(3.47)

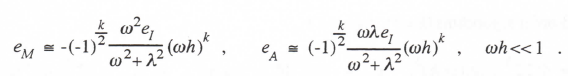

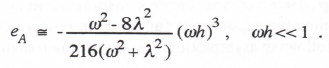

On the other hand for k even we have

3-10

(3.48)

For k = 1 and, eI = 1/2, eM and eA, as given by Eq. (3.47) agree with the asymptotic formulas derived earlier for Euler integration in Eqs. (3.11) and (3.12).

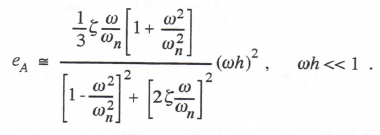

Thus far in this section we have developed general formulas for the characteristic root and transfer function errors when using single-pass integration methods to simulate first-order linear systems. We now derive general formulas for these same error measures when simulating second-order linear systems with complex roots. From Eq. (3.45) the formula for the digital system characteristic root λ* when using a kth-order integration algorithm with error coefficient eI is given by

(3.49)

With λ expressed in terms of ωn and ζ as in Eq. (3.16), Eq. (3.49) can be rewritten for any order k of integration method. To obtain the characteristic-root error coefficients in general for each k we follow the same procedure used to obtain Eqs. (3.19), (3.21), and (3.22) for Euler integration. Thus we note that

(3.50)

from which the approximate formula for eω the fractional error in root frequency , can be obtained. Next we note that

(3.51)

from which the approximate formulas for both ωn*, the undamped natural frequency of the digital root, and eωn, the fractional error in the undamped natural frequency, can be written. Finally, we note that

(3.52) ![]()

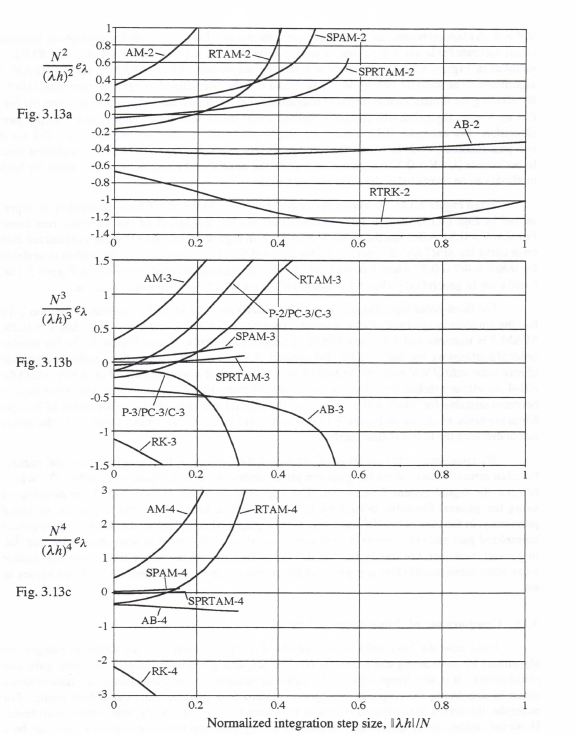

from which the approximate formula for eζ, the damping ratio error, can be written. In this way the following asymptotic formulas for eω , eωn and eζ. are obtained for k = 1, 2, 3, and 4:

First-order algorithms (k = 1), ωnh << 1

(3.53)

Second-order algorithms (k – 2), ωnh << 1,

(3.54)

3-11

Third-order algorithms (k = 3), ωnh << 1,

(3.55)

Fourth-order algorithms (k = 4), ωnh << 1,

(3.56)

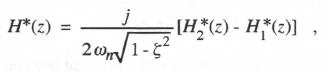

We next consider the derivation of the approximate formulas for sinusoidal transfer function gain and phase errors when single-pass integration algorithms are used to simulate a second-order linear system. In Section 3.2 we derived these formulas for Euler integration by substituting the asymptotic representation of the Euler integrator transfer function into the second-order continuous-system transfer function H(jω). We use the same procedure here by employing Eqs. (3.37) and (3.42), where H(jω) is given by Eq. (3.28). Thus

(3.57)

If we factor out ωn2 – ω2 + j2ζωnω from the denominator and set the factor equal to 1/H(jω), we can obtain the following formula for the approximate fractional error in digital system transfer function:

(3.58)

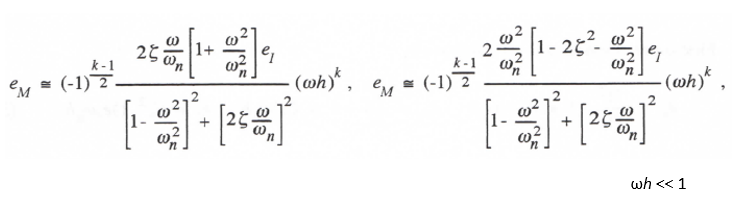

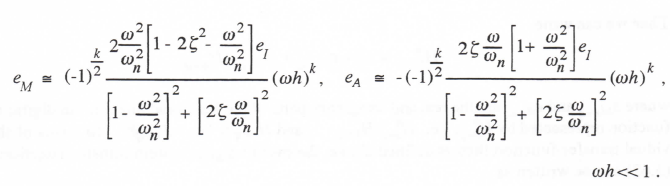

To obtain the separate real and imaginary parts of H*/H – 1 we must rationalize the right side of Eq. (3.58). The result will depend on whether the order k of the integration algorithm is odd or even. The following formulas are obtained for the fractional gain error eM and the phase error eA of the transfer function when k is odd:

(3.59)

For even k the formulas are

3-12

(3.60)

Thus far in this section we have derived formulas for transfer-function gain and phase errors for single-pass (or equivalent single-pass) integration algorithms in the simulation of first and second-order linear subsystems. It turns out that the transfer function gain and phase errors in simulating any order of linear system are equal approximately to the sum of the gain and phase errors of the individual subsystems which, when connected in series, represent the overall system. In particular, let the overall transfer function of the continuous linear system be given by

(3.61)

Here λ1, λ2, … , λn represent the n poles of H(s), i.e., the characteristic roots of the linear

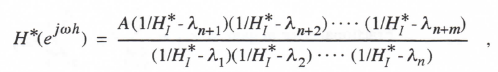

system, and λn+1, λn+2, … , λn+m represent the m zeros of H(s), where m ≤ n. From Eq. (3.42) we can write the following formula for the digital system transfer function for sinusoidal inputs:

(3.62)

where HI* is the sinusoidal transfer function of the integrator used in the digital simulation of the system.

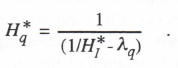

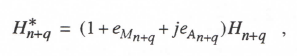

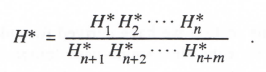

Consider next the factor (1/HI* – λq) in the denominator of Eq. (3.62). Let Hq* represent the reciprocal of this factor, i.e.,

(3.63)

If we denote eMq and eAq, as the real and imaginary parts of the fractional error in the digital transfer function represented by Hq*, i.e., Hq* / Hq – 1 i.e., as defined in Eq. (1.51), then Hq* can be represented as

(3.64) ![]()

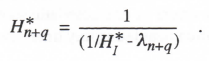

Here Hq = (jω – λq)-1, the sinusoidal transfer function for the factor (s – λq)-1 in Eq. (3.61). Next, consider the factor (1/HI* – λn+q) in the numerator of Eq. (3.62). Let H*n+q be the transfer function representing the reciprocal of this factor, i.e.,

(3.65)

3.13

Then we can write

(3.66)

where eMn+q and eAn+q are the real and imaginary parts of the fractional error in the digital transfer function represented by H*n+q, i.e., H*n+q/Hn+q – 1 and Hn+q = (jω – λn+q)-1. In terms of the individual transfer-function factors defined above, the overall digital system transfer function of Eq. (3.62) can be written as

(3.67)

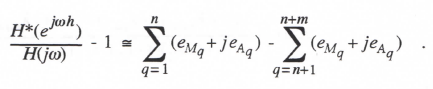

Substituting Eqs. (3.64) and (3.66) for the individual transfer-function factors on the right side of Eq. (3.67) and neglecting terms above first order in the errors eMq and eAq, we obtain the following formula for the fractional error in the overall digital system transfer function:

(3.68)

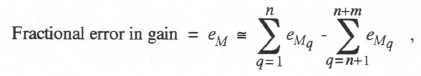

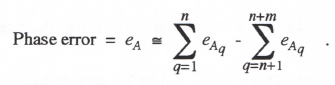

Comparison with Eqs. (1.53) and (1.54) yields the formulas shown below for the fractional error in gain and the phase error, respectively, of the overall digital system transfer function.

(3.69)

(3.70)

Eqs. (3.69) and (3.70) are completely general and quite useful. They state that the transfer-function gain and phase errors in digital simulation of any order of linear system when using a single-pass (or equivalent single-pass) integration algorithm are equal to the sum of the gain and phase errors, respectively, in simulation of the individual pole and zero factors in the transfer function. For eMq and eAq in Eqs. (3.69) and (3.70) we have developed simple asymptotic formulas in terms of the integrator error coefficient, e1, for factors with real poles or zeros in Eqs. (3.47) and (3.48), and for factors with complex poles or zeros in Eqs. (3.59) and (3.60).

3.4 Adams-Bashforth Predictor Algorithms

In the next several sections we consider a number of well known single pass (or equivalent single-pass) integration methods. For each method we determine the integrator error coefficient e1 which, along with the order k of the algorithm, allows us to use the formulas developed in the previous section to determine errors in characteristic roots and errors in the gain and phase of the sinusoidal transfer function when simulating any time-invarient linear system.

3-14

First we consider Adams-Bashforth methods up to 4th order. Euler integration could be called AB-1, since it is first-order (k = 1) and is based on a zero-order extrapolation of the state-variable derivative over the integration step h. We have seen that e1 = 1/2 for Euler integration; this means that the fractional error in characteristic root will be – λh/2, as is evident in Eq. (3.7). It also means that the transfer-function errors will be proportional to ωh/2, as is evident in Eqs. (3.11) and (3.12).

AB-2 integration, represented in Eq. (3.32), is based on the area under a first-order extrapolation from the current and past state-variable derivatives. We have seen that e1 = 5/12 and k = 2 for AB-2 integration. This means that characteristic root errors will be proportional to (5/12)(λh)2 and transfer function errors will be proportional to (5/12)(ωh)2.

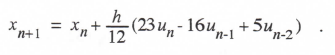

AB-3 integration, represented in Eq. (1.16), is based on the area under a quadratic extrapolation from the current and past two state-variable derivatives. For integration of the equation dx/dt = u(t), this results in the following difference equation:

(3.71)

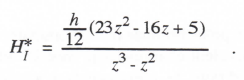

Taking the Z transform and solving for X*/U*, we obtain the AB-3 integrator Z transform. Thus

(3.72)

The AB-3 integrator transfer function for sinusoidal inputs is simply HI*(ejωh), which takes the following asymptotic form when the exponential functions are replaced by power series with terms retained up to order h4:

(3.73)

Comparison with Eq. (337) shows that eI = 3/8 and k = 3 for AB-3 integration.

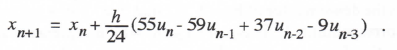

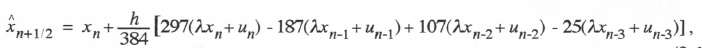

AB-4 integration, represented by Eq. (1.18), is based on the area under a cubic extrapolation using the current and past three state-variable derivatives. Integration of the equation dx/dt = u(t) leads to the following difference equation:

(3.74)

Taking the Z transform and solving for X*/U*, we obtain the AB-4 integrator Z transform. Thus

(3.75)

3-15

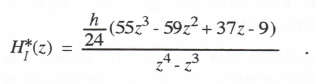

The AB-4 integrator transfer function for sinusoidal inputs is simply HI*(ejωh), which takes the following asymptotic form when the exponential functions are replaced by power series with terms retained up to order h5:

(3.76)

Comparison with Eq. (3.37) shows that eI = 251/720 and k = 4 for AB-4 integration.

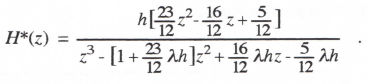

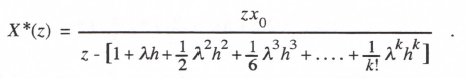

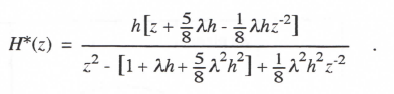

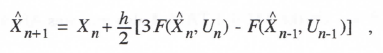

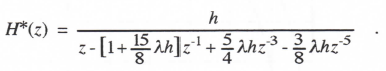

Before considering any additional single-pass methods, let us discuss the extraneous roots which result from the AB predictor methods. Assume we use AB-2, for example, to solve the first-order linear system of Eq. (3.1), which has the transfer function H(s) = 1/(s – λ). According to Eq. (3.41) we can write the Z transform of the resulting digital system by simply replacing s in H(s) with 1/HI*, where HI* for AB-2 integration is given by Eq. (3.34). In this way we obtain

(3.77)

We note that H*(z) has two poles because the denominator is a quadratic in z. One of these poles, z1, corresponds to an equivalent characteristic root λ* which will almost equal the ideal root λ when |λh| << 1. The second pole, z2, corresponds to a second characteristic root, which is extraneous. In solving Eq. (3.1) with AB-2 integration, the second root results from the dependence of the state-variable derivative, f = dx/dt = λx + u(t), on the state x. Since the AB-2 algorithm uses fn-1 as well as fn, in this case the next state xn+1 will depend not only on xn but also xn-1. Thus both xn and xn-1 become states and a second characteristic root is introduced. For small integration step sizes (|λh| << 1) it can be shown that z2 λh/2 and therefore corresponds to a transient that decays rapidly in comparison to eλt for λ negative real

.

In Chapter 2, Figure 2.3, we observed that whenever a pole of H*(z) lies outside the unit circle in the z plane, i.e., exceeds unity in magnitude, the corresponding characteristic root λ* will have a positive real part, leading to an unstable transient. When a pole of H*(z) lies on the unit circle, the system is neutrally stable. Thus all the poles of H*(z) must lie inside the unit circle in the z plane for the digital system to be stable. If Eq. (3.1) is to represent a stable continuous system, then λ < 0 for real λ and the dimensionless step size λh is negative. For λh = -1 it is apparent from the denominator of Eq. (3.77) that z = -1 is a pole of H*(z). From Figure 2.3 we see that the corresponding λ* is equal to jπ/h, which represents an undamped oscillation at one-half the integration frame rate1/h. This causes a neutrally stable transient that results from the extraneous root. For any larger integration step size (λh < -1) the extraneous root causes an unstable transient and the solution diverges, even though the first pole, z1, corresponding to the principal root, represents a stable solution.

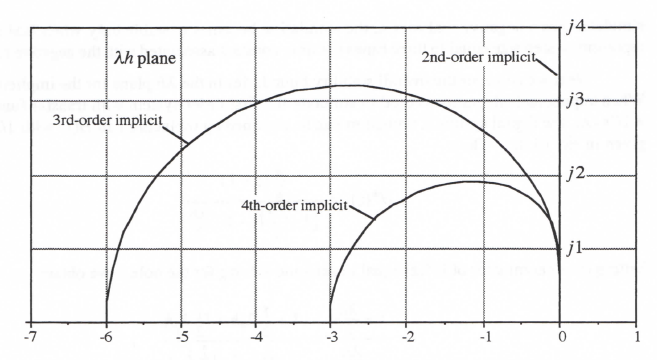

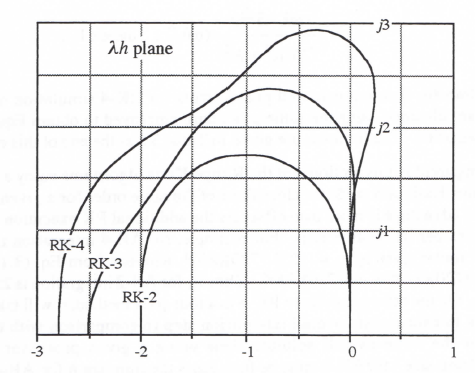

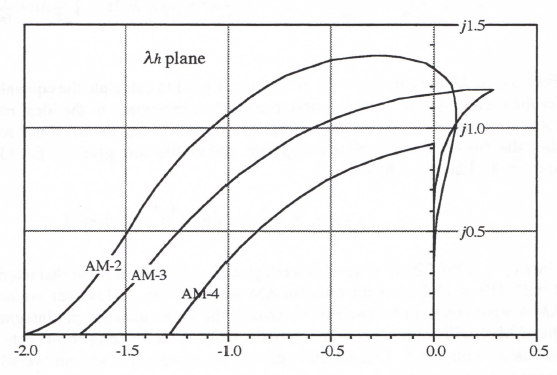

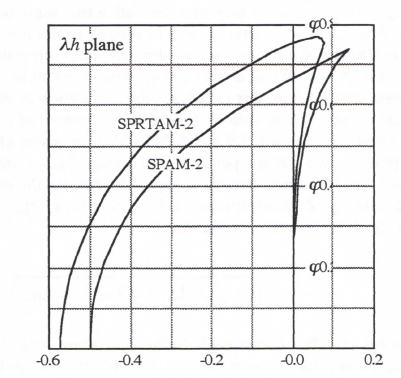

For AB-2 simulation of a second-order system there will be two extraneous roots in addition to the two principal roots. The locus of the dimensionless step values λh for which a pole of H*(z) lies on the unit circle can be calculated by setting the denominator of Eq. (3.77) equal to zero with |z| = 1 and solving for λh. In particular, we let z = ejθ and vary the polar angle θ between

3-16

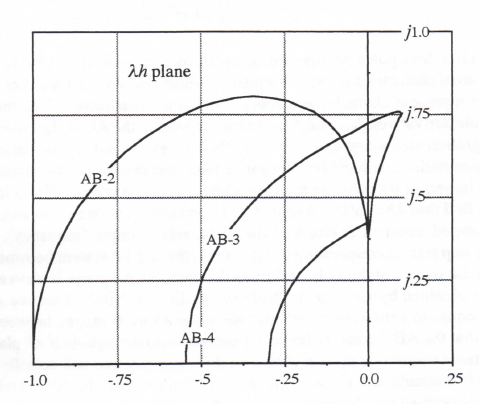

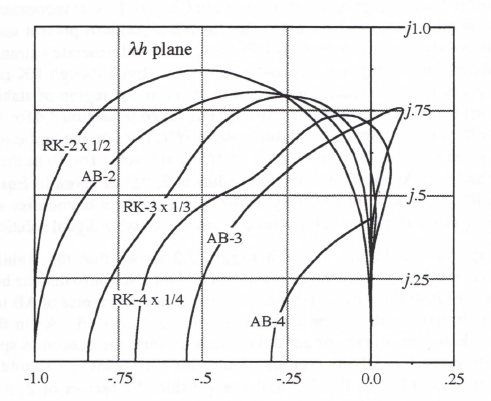

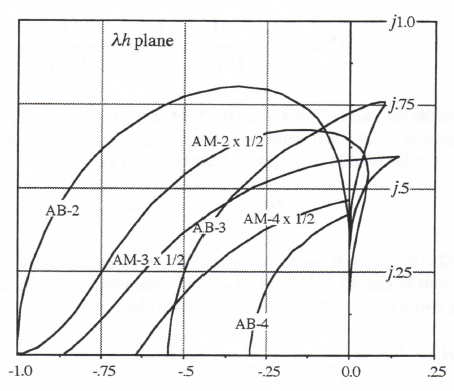

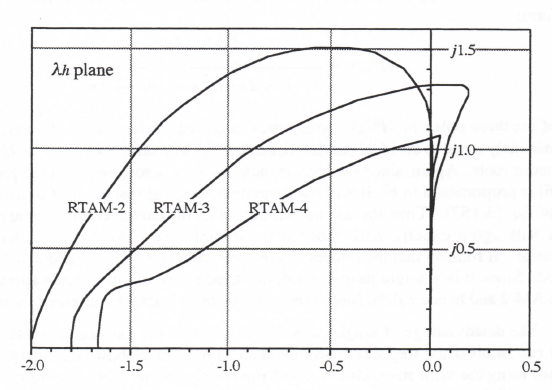

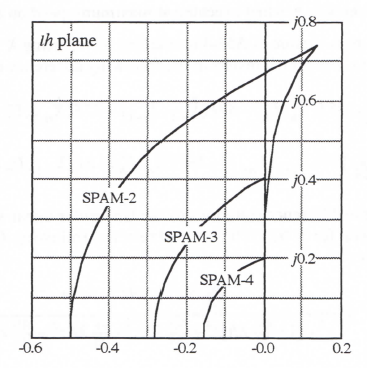

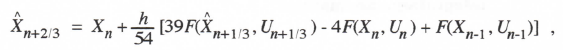

-π and π. This generates the plot shown for AB-2 in Figure 3.2. Also shown in the figure are plots of the stability boundaries for AB-3 and AB-4 integration. Only the upper half of each stability boundary is shown, since all plots will be symmetric with respect to the real axis. For all characteristic roots λ and step sizes h such that the product λh remains inside the AB-2 boundary shown in the figure, a digital system that uses AB-2 integration will be stable. For λh values lying outside the boundary, the digital system will be unstable. The frequency of oscillation is generally less than one-half the integration frame rate 1/h. The one exception, noted above, is when λh = -1, in which case the digital system exhibits neutral stability at one-half the sample frequency (frame rate), 1/h, when AB-2 integration is used.

Figure 3.2. Stability boundaries for Adams-Bashforth integration.

Note in Figure 3.2 that the stability boundary for AB-2 integration lies almost directly on the imaginary axis when (λh)imag <0.3. It follows that if the system being simulated has a root λ on the imaginary axis with a magnitude |λ| < 0.3/h, which corresponds to an undamped transient, the AB-2 simulation will also be very close to neutrally stable, although it will exhibit a small amount of negative damping. This is consistent with the result derived earlier in Eq. (3.54) for the damping ratio error associated with integration methods of order k = 2. Eq. (3.54) shows that when ζ = 0, as is the case in simulating a system with λ on the imaginary axis, the damping ratio error, eζ, is zero to order h2. However, there will in general be a damping ratio error of order h3, and it will be negative in the case of AB-2 integration. We know this because the AB-3 stability boundary falls slightly to the left of the imaginary axis in the vicinity of the origin in Figure 3.2. This means that for values of λ lying exactly on the imaginary axis and therefore outside the AB-2 stability boundary, the simulation will be slightly unstable (negative damping ratio). Thus Eq. (3.54) shows that when integration methods of order k = 2, for example AB-2 integration, are used to simulate an undamped second-order system, the frequency error (of order h2 for ζ = 0) will

3-17

predominate. On the other hand, Eq. (3.53) shows that when integration methods of order k = 1, for example Euler integration, are used to simulate an undamped second-order system, the

damping-ratio error (of order h for ζ = 0) will predominate.

To obtain the stability boundary in the case of AB-3 integration, we first write the digital system Z transform that results when AB-3 is used to simulate a first-order system with characteristic root λ. Thus we replace s in the transfer function 1/(s – λ) with 1/HI*, where HI* for AB-3 integration is given by Eq. (3.72). In this way we obtain

(3.78)

Here H*(z) has three poles because the denominator is a cubic in z. One of the poles corresponds to an equivalent characteristic root λ* which is almost equal to the ideal root λ, when |λh| << 1. The other poles represent characteristic roots which are extraneous. They result from the past two state-variable derivatives, fn-1 and fn-2, which are used in the AB-3 algorithm in addition to fn. For small integration step sizes, i.e., |λh| << 1, the two extraneous roots cause transients that decay rapidly in comparison with eλt for λ negative real. But they can cause instability as the integration step h gets larger. If we consider the case where z = -1 is a pole of H*(z) in Eq. (3.78) and solve for λh we find that λh = -6/11. Again, z = -1 corresponds to one of the extraneous roots and leads to an undamped transient at one-half the frame rate (sample frequency), 1/h. For any larger integration step size, corresponding to λh < -6/11, the digital system becomes unstable. For AB-3 integration the overall stability boundary in the complex λh plane is shown in Figure 3.2. This boundary is obtained by the same method used earlier for AB-2. Thus we set the denominator of Eq. (3.78) equal to zero with z = ejθ and solve for λh as θ ranges between –π and π. Note in Figure 3.2 that the AB-3 stability boundary projects into the right-half λh plane. This means that a neutrally-stable continuous system with λ on the imaginary axis will actually result in a stable (i.e., damped) AB-3 simulation, since λh in this case falls within the AB-3 stability boundary. This conclusion is verified by reference to Eq. (3.55), which shows that for ζ = 0 and eI positive (eI = 3/8 for AB-3 Integration), the damping ratio ζ* of the digital simulation will be positive. Note also from Eq. (3.55) that for third-order integration algorithms (i.e., k = 3), the damping ratio error is of order h3 will predominant compared with the frequency error, which is of order h4 when ζ = 0.

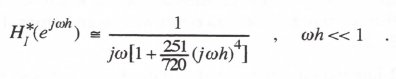

To obtain the stability boundary in the case of AB-4 integration, we write the digital system Z transform that results when AB-4 is used to simulate the first-order linear system with characteristic root λ. In this case we obtain H*(z) by replacing s in the transfer function 1/(s – λ) with 1/HI*, where HI* for AB-4 integration is given by Eq. (3.75). In this way we obtain

(3.79)

Here we see that H*(z) has four poles, three of which are extraneous. They result from the past three state-variable derivatives, fn-1, fn-2 and fn-3, which are used in the AB-4 algorithm in addition to fn. Again, for small integration step sizes, i.e., |λh| << 1, the three extraneous roots correspond to transients that decay rapidly in comparison with eλt for λ negative real. But they can cause

3-18

instability as the integration step h gets larger. The overall stability boundary in Figure 3.2 for AB-4 integration is obtained by the same method used earlier for AB-2 and AB-3. Thus we set the denominator of Eq. (3.79) equal to zero with z = ejθ and solve for λh as θ ranges between –π and π If we set the denominator equal to zero with z = -1 (this corresponds to θ = π in z = ejθ) and solve for λh, we find that λh = -3/10. Thus AB-4 integration becomes unstable when the step size h is larger than -0.3/λ (we recall that λ must be negative for the continuous system to be stable). For h = -3/λ the digital system will be neutrally stable with an undamped transient at one-half the sample frequency due to the extraneous pole at z = -1. For this same step size, which is only 30 percent of the continuous-system time constant, -1/λ, the principal root λ* of the AB-4 simulation is within one percent of the ideal root λ!

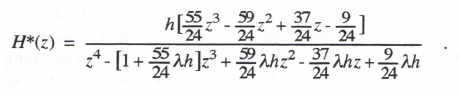

Figure 3.3 illustrates the effect of the unstable extraneous root when the integrator step size is too large. Shown in the figure is the response of a first-order linear system to a unit step input as computed using AB-4 integration with an integrator step size h = 0.36. Since the system being simulated has a unit time constant, λ = -1 and λh = -0.36. Thus λh is outside the AB-4 stability boundary in Figure 3.2. The resulting instability is very apparent in Figure 3.3. At the same time we note that the AB-4 solution is very close to the exact solution until such time as the small initial transient associated with the unstable extraneous root grows to significant amplitude.

Figure 3.3. Unit step response of first-order system when simulated using AB-4 integration; the instability caused by one of the extraneous roots is evident.

Note in Figure 3.2 that the right side of the stability boundary for AB-4 integration appears to lie directly on the imaginary axis. In fact, this is very nearly true, which means that the damping ratio in simulating an undamped second-order system using AB-4 integration will be very nearly

3-19

equal to the ideal value of zero. In fact, reference to Eq. (3.56) for the damping ratio error eζ associated with integration methods of order k = 4 shows that the error is zero to order h4. The actual ζ* for ζ = 0 is of order h5, whereas the frequency error eω, is of order h4. In summary, for the simulation of undamped second-order systems Eqs. (3.53) through (3.56) show that the frequency error eω predominates when using integration algorithms of even order, and the damping ratio error eζ predominates when using integration algorithms of odd order.

From Figure 3.2 it is clear that the higher the order of the predictor integration method, the more restrictive is the upper limit on integration step size based on stability considerations. For real-time simulation, where dynamic accuracy requirements are often modest (0.1 to 1 percent), it is evident that AB-4 is unlikely to be a strong candidate algorithm due to instability considerations. This is especially true in simulating stiff systems, such as those containing controller subsystems with very short time constants. For adequate stability margin the AB-4 method requires the order of four integration steps per smallest time constant in the system being simulated. It is likely that such a small step size will produce a much more accurate solution than is needed. Yet any attempt to speed up the solution with even a modest increase in step size will lead to instability due to an extraneous root. In this case a lower order predictor method will give better results.

From the above discussion it is clear that the higher order AB methods should be used with some caution. However, if the characteristic roots of the linearized system are known and, in particular, the roots of largest magnitude are known, then the maximum safe step size can be estimated from the stability boundaries. Alternatively, the computer simulation can actually be run and the largest allowable step size determined experimentally.

Another disadvantage of the AB predictor methods is the startup problem. This results from the need to know past state-variable derivatives at t = 0. These past values can be determined by integrating backwards the required number of steps from t = 0, using an integration method such as Runge-Kutta which has no startup problem. This was in fact the way in which the AB-4 solution shown in Figure 3.3 was produced. A simpler but less accurate startup method is to use Euler integration for the first step, AB-2 integration for the second, AB-3 for the third, etc., until the final desired order of AB algorithm is attained. Subsequent integration steps use that algorithm. In many simulations the small transient startup errors introduced by this scheme are unimportant. It is not an appropriate startup method, however, in solving two-point boundary value problems.

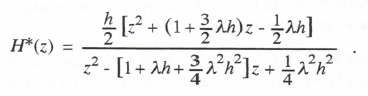

3.5 Implicit Integration Algorithms

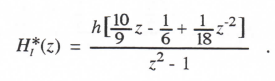

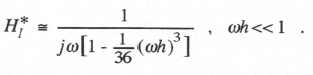

In this section we consider the three specific implicit integration methods that form the basis for the Adams-Moulton explicit predictor-corrector algorithms of order two, three, and four. We have already introduced the formulas for these implicit methods in Section 1.2 of Chapter 1. Here we will determine the integrator error coefficient, eI, as defined by Eq. (3.37), for each method. This will, in turn, let us apply the formulas developed in Section 3.3 for the characteristic root and transfer function errors. Note that these implicit methods can be converted into explicit, single-pass methods in the simulation of linear systems, as will be described in Chapter 6.

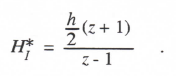

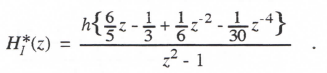

The first of the three implicit methods is trapezoidal integration, given earlier in Eq. (1.8). When used to solve the first-order equation dx/dt = u(t), the difference equation becomes

3-20

(3.80)

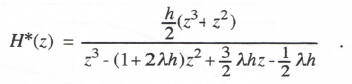

Taking the Z transform and solving for HI*, we obtain

(3.81)

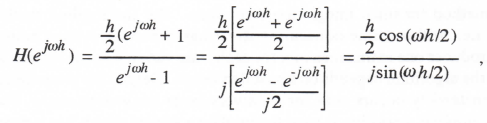

Replacing z with ejωh in Eq. (3.81), we obtain HI* (ejωh), the trapezoidal integrator transfer function for sinusoidal inputs. Thus

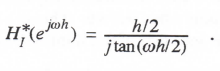

or

(3.82)

Noting that tan y = y + 3/3 + … , we can rewrite Eq. (3.82) as

(3.83)

Comparison with Eq. (3.37) shows that the integrator error coefficient eI = -1/12 and k = 2. Earlier, based on Eq. (3.36), we determined that eI = 5/12 for AB-2 integration. Thus it is clear that trapezoidal integration is five times more accurate than AB-2 integration for small integration step sizes. From Section 3.3 we know that this accuracy comparison applies as well to characteristic root errors and transfer function gain and phase errors. The trapezoidal method has the further advantage that it does not introduce any extraneous roots. However, it cannot be used directly as a single-pass method in the solution of nonlinear differential equations because of the implicit nature of the algorithm.

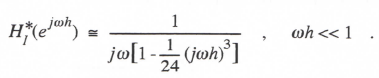

In Eq. (1.15) we introduced a third-order implicit algorithm, which takes the following form in integrating the equation dx/dt = u(t):

(3.84)

Taking the Z transform and solving for HI*, we obtain

(3.85)

3-21

Again, the integrator transfer function for sinusoidal inputs is HI*(ejωh), which has the following asymptotic form when the exponential functions are expanded in power series and terms to order h4 are retained:

(3.86)

Comparison with Eq. (3.37) shows that the integrator error coefficient eI = -1/24 and k = 3 for this third-order implicit integration algorithm. On the other hand Eq. (3.73) shows that eI = 3/8 for AB-3 integration. Thus the third-order implicit method is nine times more accurate than the third-order predictor method for small integration step sizes. But the implicit method can only be used explicitly, i.e., without time-consuming iterations, when the system being simulated is linear. Also, it introduces one extraneous root for each state variable in the system being simulated. This is because the algorithm depends on a past derivative. Despite the presence of the extraneous root, however, instability occurs only for relatively large integration steps. For example, when the third-order implicit algorithm is used to simulate a system which has a negative real root λ, the simulation becomes unstable only when |λh|>6. This is a very large step indeed, and should not be a significant deterrent in the selection of this third-order method.

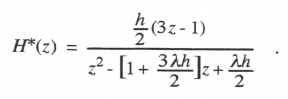

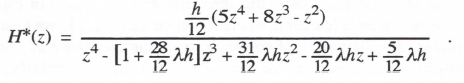

Finally, we consider the fourth-order implicit algorithm given in Eq. (1.17). When used to solve the equation dx/dt = u(t), it takes the form

(3.87) ![]()

Taking the Z transform and solving for HI* we obtain

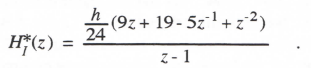

(3.88)

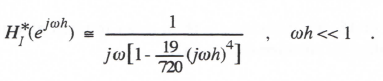

As noted previously, the integrator transfer function for sinusoidal inputs is HI*(ejωh). When the exponential functions are expanded in power series and terms to order h5 are retained, the following asymptotic formula for the integrator transfer function is obtained:

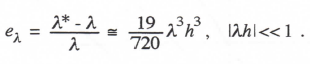

(3.89)

Comparison with Eq. (3.37) shows that the integrator error coefficient eI = -19/720 and k = 4 for this fourth-order implicit integration algorithm. For AB-4 integration we found in Eq. (3.76) that eI = 251/720. Thus the fourth-order implicit method is 251/19 or 13.2 times more accurate than the AB-4 predictor method for small integration step sizes. Again, the implicit method can only be used as an explicit algorithm in the simulation of linear systems. Also, it introduces two extraneous roots for each state variable in the system being simulated. This is because the algorithm depends on two past derivatives. These extraneous roots do not, however, cause significant stability problems for large integration steps. For example, when the system being

3-22

simulated has a negative real root λ, the simulation becomes unstable only when |λh| > 3. This represents a step size equal to three times the time constant associated with the negative real root.

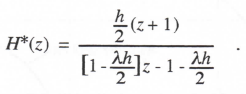

Next we consider the overall stability boundaries in the λh plane for the implicit methods. When trapezoidal integration is used to simulate the first-order system with transfer function H(s) = 1/(s – λ), the digital system Z transform can be obtained by replacing s in H(s) with 1/HI*(z), as given in Eq. (3.81). Thus

(3.90)

Setting the denominator of H*(z) equal to zero and solving for the pole z, we obtain

(3.91)

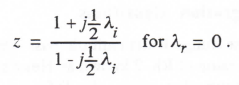

Where λ = λr + jλi. For the case where the characteristic root λ lies on the imaginary axis in the λh plane, λr = 0 and Eq. (3.91) becomes

It follows for λr = 0 that |z| = 1, which means that the unit circle in the z plane corresponds to the imaginary axis in the λh plane. Thus the stability boundary for trapezoidal integration is the imaginary axis in the λh plane, and the stable region consists of the entire left-half plane. We conclude that when trapezoidal integration is used to simulate a stable linear system (λ in the left-half plane), the resulting simulation will be stable regardless of the step size h.

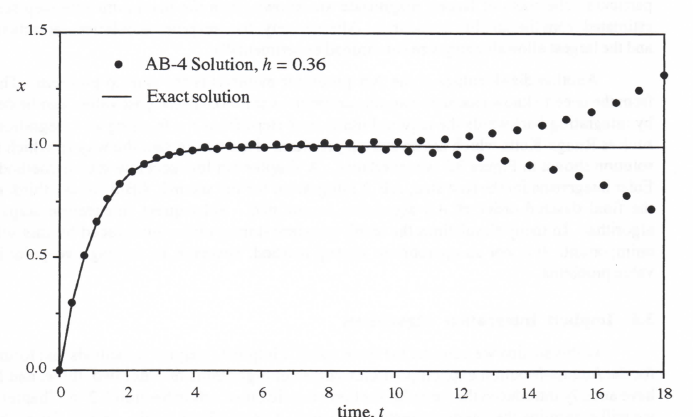

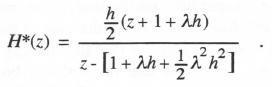

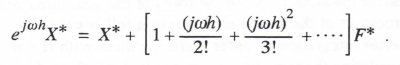

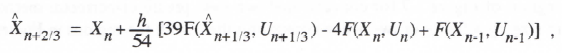

To obtain the stability boundaries in the λh plane for third and fourth-order implicit integration, we follow the procedure used earlier for the AB methods. In this way we obtain the stability boundaries shown in Figure 3.4. As noted above, the stability boundary for the second-order implicit method, i.e., trapezoidal integration, is the imaginary axis, with the stability region consisting of the entire left-half plane. As in the case of the stability regions for AB integration shown in Figure 3.2, we note in Figure 3.4 that the stability region in the λh plane shrinks as the algorithm order increases. Note also that the stability boundary for the fourth-order implicit method approaches the imaginary axis more closely than the boundary for the third-order method. This means that the damping ratio error in simulating either an undamped or lightly-damped second-order system will be less for the method of order four. Again this is consistant with Eqs. (3.55) and (3.56), which show that when the integration algorithm order k = 4 and ζ = 0 for the continuous system, the damping ratio error eζ is zero to order h4 and the frequency error predominates. Conversely, when the algorithm order k = 3 and ζ = 0 for the continuous system, the damping ratio error is finite to order h3 and predominates over the frequency error, which is zero to order h3. As noted earlier, the above conclusions hold for all single pass (or equivalent single-pass) integration methods.

3-23

Figure 3.4 Stability boundaries for implicit integration methods.

3.6 Runge-Kutta Integration Algorithms

Next we consider the Runge-Kutta multiple-pass integration methods introduced in Section 1.2. We consider first a version of RK-2 known as Heun’s method, which from Eqs. (1.25) and (1.26) takes the following form when solving the differential equation given by Eq. (3.1):

(3.92) ![]()

(3.93)

Here n+1 is an estimate of xn+1 based on Euler integration. Taking the Z transform of Eqs. (3.92) and (3.93) with x0 = 0 and solving for H*(z) = X*(z)/U*(z), we obtain

(3.94)

As noted in Eq. (2.22), the pole of H*(z), z1 = 1 + λh + λ2h2/2, is related to the equivalent characteristic root, λ*, by the formula z1 = eλ*h. The asymptotic formula for λ* for |λh| << 1 can be found with the same procedure used earlier in Sections 3.2 and 3.3. Thus we set the denominator of H*(z) equal to zero with z replaced by eλ*h, expand the exponential function in a power series with terms retained up to order h3, and solve for λ*. In this way we obtain

(3.95)

3-24

This equation is valid for both real and complex λ. The fractional error in the characteristic root, eλ, is given by

(3.96)

As expected for RK-2 integration, the error varies as the square of the integration step size h. Comparison of Eq. (3.96) with the result in Eq. (3.45) for single-pass integration methods suggests that eI = 1/6 for RK-2 integration. Although the general single-pass transfer-function error formulas derived in Section 3.3 are not valid for the multiple-pass RK methods considered in this section, as we shall confirm shortly, the characteristic-root error formulas are valid. The reason for this will become apparent in the next section when we derive H*(z) for digital simulation of the first-order equation dx/dt = λx – u(t) using power-series integration.

Since eI = 5/12 for AB-2 integration, it follows that the AB-2 root error is 2.5 times the root error of RK-2. On the other hand, the RK-2 algorithm requires two passes through the state equations per integration step. This means that RK-2 will in general take about twice as long to execute each integration step compared with AB-2. This results in double the step size for a given overall computation time and hence four times the root error, since both methods are second order. The net effect is to make the AB-2 root error 2.5/4 or 0.625 times the error when using RK-2 integration.

We next consider the transfer function for sinusoidal inputs, H*(ejωh). This is obtained by substituting ejωh for z in Eq. (3.94). Expanding ejωh in power series and retaining terms up to order h2 in the numerator and h3 in the denominator of H*, we can derive the following approximate formula for the fractional error in the transfer function:

or

(3.97)

Again we note from Eqs. (1.53) and (1.54) that the real and imaginary parts of the right side of Eq. (3.97) are equal, respectively, to the approximate fractional gain error and phase error of the digital transfer function for sinusoidal inputs. Thus

(3.98)

(3.99)

As expected, the transfer function gain and phase errors in RK-4 simulation of a first-order system vary as the square of the integration step size h. Note also that the result in Eq. (3.98) is different

3-25

from the result for eM in Eq. (3.48) for eI = 1/6 and k = 2. This confirms that the general transfer function error formulas for single-pass integration methods cannot be used for RK-2 integration.

Next we consider the errors in RK-2 simulation of an underdamped second-order linear system. Again we can use Eq. (3.95) for the characteristic root, λ*, with the complex λ given in terms of the undamped natural frequency ωn and damping ratio ζ by Eq. (3.16). As noted above, for the characteristic root errors we can use for RK-2 integration the general result already derived in Eq. (3.54) for a single-pass algorithm with eI = 1/6 and k = 2. Thus we find that

(3.100)

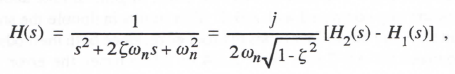

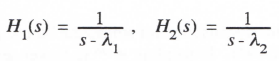

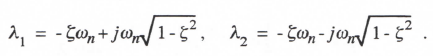

In considering the transfer function errors when RK-2 integration is used to simulate the second-order system given by Eq. (3.27), we take advantage of the result already obtained in Eq. (3.58) when the system being simulated is first order with transfer function given by 1/(s – λ). In this case the transfer function H(s) for the second-order system can be written as

(3.101)

where

(3.102)

and the characteristic roots λ1 and λ2 are given by

(3.103)

From Eq. (3.101) it is clear that we can represent the Z transform of a digital system that is simulating an underdamped second-order system in terms of the sum of the Z transforms for two first-order simulations. Thus

(3.104)

where HI*(z) and H2*(z) are the Z transforms for the digital simulations of H1(s) and H2(s), respectively, as defined in Eq. (3.102). It follows that the error, H*(ejωh) – H(jω), in the digital transfer function for sinusoidal inputs can be written in terms of the digital transfer function errors for the first-order subsystems. In particular, if we let λ = λ1 in Eqs. (3.98) and (3.99), it follows that H1*(ejωh) – H1(jω) H1(jω)(eM1+jeA1), where eM1 and eA1 represent the gain and phase errors, respectively, of H1*. Similarly, with λ = λ2 in Eqs. (3.98) and (3.99), it follows that H2*(ejωh) – H2(jω) H2(jω)(eM2 + jeA2), where eM2 and eA2 represent the gain and phase errors, respectively, of H2*. Then from Eq. (3.104) we can write the following formula for H*(ejωh) – H(jω):

3-26

(3.105) (3.105) |

Eq. (3.105) is then divided by H(jω) to obtain H*/H – 1, the fractional error in digital transfer function. This then leads directly to the following formulas for the real and imaginary parts of H*/H – 1 i.e., the fractional error in transfer function gain, eM, and the phase error, eA, when RK-2 integration is used to simulate a second-order system:

(3.106)

(3.107)

Note that the result in Eqs. (3.106) is different from the result for eM in Eq. (3.60) for eI = 1/6 and k = 2. Again, this shows that the general transfer function error formulas for single-pass integration methods cannot be used for RK-2 integration.

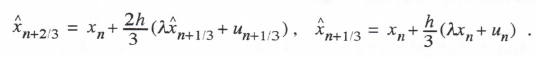

Before considering RK integration methods of higher order, we examine the real-time RK-2 algorithm introduced in Chapter 1. Like conventional RK-2 integration, real-time RK-2 is a two-pass method that employs Euler integration for the first pass. But the first pass is actually only a half step, i.e., it uses Euler integration to compute an estimate of the state halfway through the step. This estimate is then used to compute the derivative halfway through the step, which in turn is used in an Euler formula to compute the state at the end of the step. Applying the real-time RK-2 method, as defined in Eqs. (1.27) and (1.28), to the first-order system given by Eq. (3.1) leads to the following difference equations:

(3.108)

(3.109)

Using a sample period T = h/2, we can rewrite Eqs. (3.108) and (3.109) as the following single difference equation:

(3.110) ![]()

Taking the Z transform, replacing T with h/2, and solving for H*(z) = X*(z)/U*(z), we obtain

3-27

(3.111)

We determine the equivalent characteristic root λ* of the digital system by setting the denominator of Eq. (3.111) equal to zero with z = eλ*T = eλ*h/2. Then z2 = eλ*h and the denominator of H* in Eq. (3.111) becomes the same as the denominator of H* in Eq. (3.94) for the standard RK-2 with z = eλ*h. Thus λ* for real-time RK-2 is the same as λ* for standard RK-2 and the characteristic root errors given by Eqs. (3.96) and (3.100) apply for either RK-2 method.

To obtain the digital transfer function for sinusoidal inputs we replace z with ejωT = ejωh/2 in Eq. (3.111). Expanding the exponential functions in power series and retaining terms up to order h3, we derive the following asymptotic formulas for the transfer function fractional gain and phase errors:

(3.112)

(3.113)

Comparison with Eq. (3.98) for standard RK-2 shows that the gain error, eM, for real-time RK-2 is less than half as large. This improved accuracy results from the fact that un and un+1/2 serve as inputs for the nth integration frame in real-time RK-2 integration. This means that the input is sampled at twice the integration frame rate. In standard RK-2 the inputs for the nth integration frame are un and un+1, which represents an input sample rate equal to the integration frame rate.

The transfer function gain and phase errors for real-time RK-2 simulation of second-order linear subsystems are obtained using the same procedure employed to get Eqs. (3.106) and (3.107) for standard RK-2 integration. The formulas are given in Table 3.2 at the end of this chapter. Comparison with the formulas for standard RK-2 shows that the phase errors are identical. However, the gain error for real-time RK-2 is at least a factor of two smaller. Again, this can be ascribed to the doubled input sampling rate in the case of real-time RK-2.

In addition to providing better transfer-function gain accuracy, the real-time RK-2 method is compatible with real-time inputs, whereas standard RK-2 (Heun’s method) is not. As pointed out in Section 1.2, standard RK-2 requires the input un+1 at the start of the second pass, halfway through the nth integration frame, before un+1 is available in real time. Halfway through the nth integration step, real-time RK-2 only requires un + 1/2, which has just become available in real-time. From Eqs. (3.94) and (3.111) we see that the denominator of H*(z) is the same for both RK-2 methods when simulating the continuous system given by H(s) = 1/(s – λ). It then follows that the stability region in the λh plane will be identical for both methods. Later in this section we will examine the stability regions for all the RK algorithms considered here.

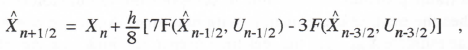

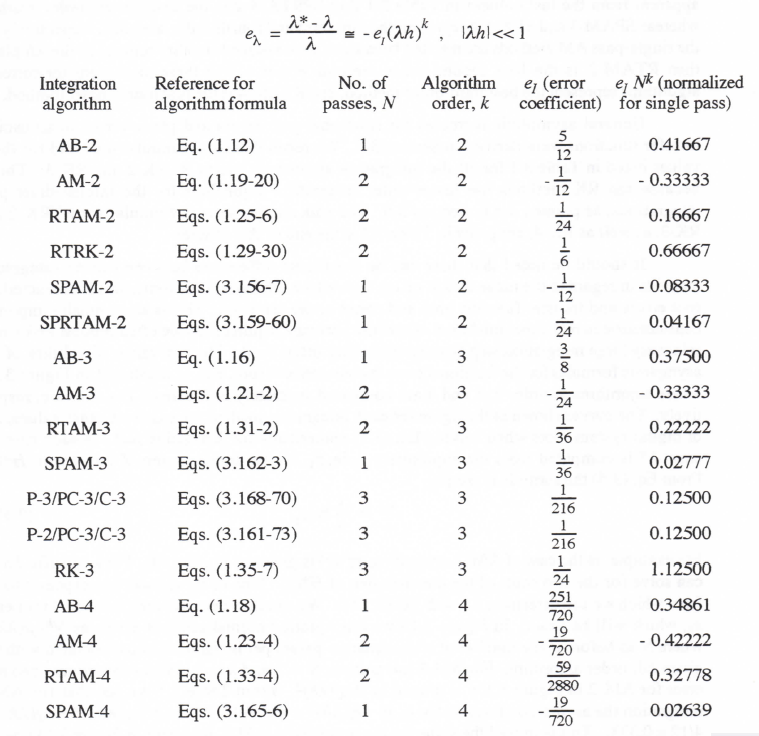

We now consider the RK-3 algorithm defined by Eqs. (1.35), (1.36) and (1.37) in Section 1.2. When applied to the first-order linear system described by Eq. (3.1), the method results in the following difference equations:

3-28

where

(3.114)

Using a sample period T = h/3, we can rewrite Eq. (3.114) as the following single difference equation:

(3.115)

Taking the Z transform, replacing T with h/3, and solving for H*(z) = X*(z) / U*(z), we obtain

(3.116)

We determine the equivalent characteristic root λ* by setting the denominator of H* equal to zero with z = eλ*T = eλ*h/3. Expanding the exponential functions in power series and retaining terms to order h4, we obtain the following asymptotic formula for the characteristic root error, eλ:

(3.117)

As expected for RK-3 integration, the error varies as the cube of the integration step size h. Comparison of Eq. (3.117) with the result in Eq. (3.45) for single-pass integration methods suggests that eI = 1/24 for RK-3 integration. Again we note that the general single-pass transfer-function error formulas derived in Section 33 are not valid for the multiple-pass RK methods, but the characteristic-root error formulas are valid. Thus we can immediately write from Eq. (3.55) with k = 3 and eI = 1/24 the formulas for the RK-3 root errors when the continuous system root λ is complex.

To obtain the digital transfer function for sinusoidal inputs we replace z with ejωT = ejωh/3 in Eq. (3.116). Expanding the exponential functions in power series and retaining terms up to order h4, we derive the following asymptotic formulas for the transfer function fractional gain and phase errors:

(3.118)

(3.119)

The transfer function gain and phase errors for RK-3 simulation of second-order linear subsystems are obtained using the same procedure employed to get Eqs. (3.106) and (3.107) for RK-2 integration. The formulas are given in Table 3.2 at the end of this chapter.

3-29

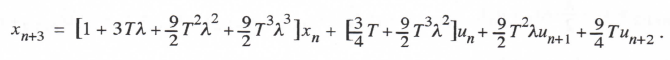

Finally, we consider the RK-4 integration algorithm defined by Eqs. (1.38) through (1.41) in Chapter 1. When applied to the first-order linear system described by Eq. (3.1), the method results in the following difference equations:

Where

(3.120)

Using a sample period T = h/2, we can rewrite Eq. (3.120) as the following single difference equation:

(3.121)

Taking the Z transform, replacing T with h/2, and solving for H*(z) = X*(z) /U*(z), we obtain

(3.122)

As before, we determine the equivalent characteristic root λ* by setting the denominator of H* equal to zero with z = eλ*T = eλ*h/2. Expanding the exponential functions in power series and retaining terms to order h5, we obtain the following asymptotic formula for the characteristic root error, eλ:

(3.123)

As expected for RK-4 integration, the error varies as the fourth-power of the integration step size h. Comparison of Eq. (3.123) with the result in Eq. (3.45) for single-pass integration methods suggests that eI = 1/120 for RK-4 integration. Once more we note that the general single-pass characteristic-root error formulas derived in Section 3.3 are valid for the multiple-pass RK methods, but the transfer-function error formulas are not. Thus we can immediately write from Eq. (3.56) with k = 4 and eI = 1/120 the formulas for the RK-4 root errors when the continuous system root λ is complex.

To obtain the digital transfer function for sinusoidal inputs we replace z with ejωT = ejωh/2 in Eq. (3.122). Expanding the exponential functions in power series and retaining terms up to order h5, we derive the following asymptotic formulas for the transfer function fractional gain and phase errors:

3-30

(3.124)

(3.125)

The transfer function gain and phase errors for RK-4 simulation of second-order linear subsystems are obtained using the same procedure employed to obtain Eqs. (3.106) and (3.107) for RK-2 integration. The formulas are given in Table 3.2 at the end of this chapter.

It is apparent in this section that the Runge-Kutta algorithms enjoy a significant advantage over the Adams-Bashforth predictor algorithms of the same order for a given integration step size. However, this advantage is more than offset by the additional RK execution time that results from multiple passes per integration step. For example, for AB-4 integration the fractional error in characteristic root is given by eλ – (251/(720)(λh)4, whereas from Eq. (3.123) for RK-4 we see that eλ-(1/(120)(λh)4 = -(6/(720)(λh)4 . Thus eλ for AB-2 integration is 251/6 times eλ for RK-4 integration. On the other hand, since RK-4 is a four-pass method, it will take approximately four times as long to execute one overall integration step in comparison with the single-pass AB-4 method. For the same overall solution time using a given processor this means that the mathematical step-size h for RK-4 must be four times the step size h for AB-4. Since eλ varies as h4 for both algorithms, the error in eλ for AB-4 will be 251/(6×44), or 251/1536) times eλ for RK-4. Thus AB-4 is in fact more accurate than RK-4 for a given computer speed.

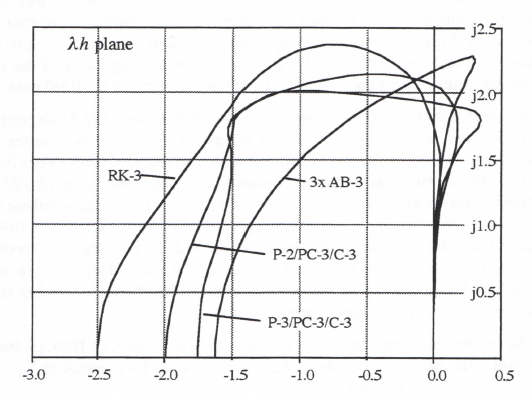

Another disadvantage of RK-4, as noted in Chapter 1, is its incompatibility with real-time inputs. On the other hand, we have noted that the AB algorithms present startup problems. They also perform poorly in response to discontinuous inputs and generate extraneous roots which can cause instabilities if the step size h is made too large. Even though RK methods introduce no extraneous roots, it is nevertheless important to examine the region of stability in the λh plane. This is accomplished by using the same method employed in Section 3.4 for AB integration. Thus we calculate the values of λh for which the pole of H*(z) lies on the unit circle in the z plane. In particular, we let z = ejθ in the denominator of H*(z) and solve for λh as the polar angle θ varies between –π and +π. Application of this procedure to H*(z) as given in Eqs. (3.94), (3.116) and (3.122) for RK-2, 3 and 4, respectively, leads to the stability boundaries shown in Figure 3.5. Values of λh lying within these boundaries correspond to stable digital solutions.

Comparison of Figure 3.5 with Figure 3.2 shows that the stability regions for RK integration are much larger than those for AB integration. Note also that the higher the order of the RK method, the larger the stability region. This is exactly the reverse of AB integration, where the stability region gets smaller as the algorithm order is increased. As in the case of accuracy comparisons, however, it is important to take into account the execution speeds of the different algorithms in comparing stability regions. From this viewpoint, we should reduce the stability regions for the multiple-pass RK-2, 3 and 4 algorithms by factors of 2, 3 and 4, respectively, when comparing them with the stability regions for the single-pass AB algorithms. This has been done in Figure 3.6, where the stability boundaries for AB integration are also shown. From the figure it is evident that the RK stability regions, even when normalized to account for multiple

3-31

passes, still are larger than the AB-2 regions. This is especially true in the case of 3rd and 4th-order algorithms.

Figure 3.5. Stability regions for Runge-Kutta integration algorithms.

Figure 3.6. Comparison of normalized Runge-Kutta stability regions with Adams-

Bashforth stability regions.

3-32

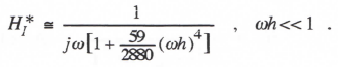

3.7 Power Series Integration Algorithms

When the derivatives of the state variables are functions that can be differentiated analytically, then the following power-series algorithm can be used for numerical integration of the state equation, dx/dt = f:

(3.126)

The order of the integration method depends on the number of terms utilized before the power series is truncated. Halin has been particularly successful in exploiting the power series method as an effective means of integrating nonlinear differential equations.* This type of integration is often used with variable-order integration formulas as well as variable step size. However, we will assume here that both the algorithm order and step size are fixed, and that the nonlinear differential equations have been linearized so that we can use the method of Z transforms to analyze the dynamic errors. The results of this analysis will give us insight into the dynamic performance of the power series method compared with other integration algorithms.

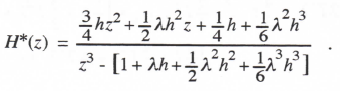

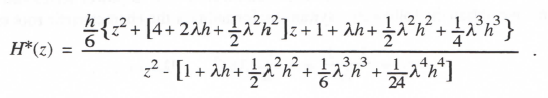

Taking the Z transform of Eq. (3.126) and assuming that x0 = 0, we obtain

(3.127)

To derive the power series integrator transfer function for a sinusoidal input data sequence, we replace z by ejωh and note that fn = ejωnh, n = jωejωnh = jωfn, n = (jω)2fn,, etc. It then follows that Eq. (3.127) becomes

Solving for X*/F* = HI*, the power series integrator transfer function, we obtain

(3.128)

where we have truncated the power series at the hk-1 term. Expanding ejωh in a power series and retaining terms to order hk , we obtain the following asymptotic formula for the power series integrator transfer function:

(3.129)

* See, for example, Halin, H.J., “Integration Across Discontinuities in Ordinary Differential Equations Using Power Series,” Simulation, Vol. 32, No. 2, February, 1979, pp 33-45.

3-33

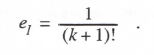

Comparison with Eq. (3.37) shows that the kth-order power series integration algorithm has an integrator error coefficient, eI, given by

(3.130)

Since the power series integration algorithm is a single-pass method, the value of eI in Eq. (3.130) can be used directly in all the asymptotic formulas of Section 3.3 for characteristic root errors, and for transfer function gain and phase errors.

To determine the stability region in the λh plane for power series integration, we apply the method to the first-order system given by Eq. (3.1) with the input u(t) = 0, i.e., dx/dt = f = λx. Noting that ḟ = λẋ = λ2x, = λ3x, etc., we can write the difference equation as

(3.131)

Here we have assumed a power series integration algorithm of order k. Taking the Z transform and solving for X*(z), we obtain

(3.132)

Comparison of the denominator of X*(z) in Eq. (3.132) for k = 2, 3 and 4 with the denominator of H*(z) in Eqs. (3.94), (3.116) and (3.122) for RK-2, 3 and 4 integration, respectively, we see that they are identical for the same k. Since the roots of the denominator of the Z transform determine the equivalent roots λ* of the digital simulation, it follows that the equivalent characteristic roots λ* for power series integration of order k are identical with the roots λ* for RK integration of order k when simulating the same linear system. It also follows for a given integration order k that the stability regions in the λh plane will be identical for the two methods. Thus the stability regions in Figure 3.5 apply equally well to the power series integration method.

However, since the power series method is a single-pass method, it is possible that it will execute much faster per overall integration step than the multiple-pass Runge-Kutta methods. On the other hand, if the derivative function f = dx/dt is a complex analytic function, the calculation of the derivatives of f may be very intensive, especially for a large order k of integration algorithm. This will slow down the power series method, so that it is not easy to draw general conclusions on which method will be faster. The speed tradeoff will be very problem dependent. Note also that it may be difficult, if not impossible, to apply the power series method to real time simulation, since the required higher derivatives of the real-time inputs will not in general be available. Furthermore, many real-time simulations involve derivative functions that are represented by multi-dimensional data tables rather than analytic functions. In this case it does not appear that the power series method is a candidate integration algorithm, since the required state-variable derivatives do not exist. Nevertheless, power series integration methods can be very efficient for simulating particular types of differential equations.

3-34

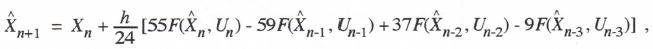

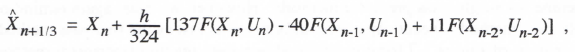

3.8 Adams-Moulton Predictor-corrector Algorithms

In Chapter 1 we noted that the AB predictor algorithms can be combined with implicit algorithms to mechanize the AM (Adams-Moulton) predictor-corrector integration algorithms. In this section we consider the AM-2, 3 and 4 methods in regard to characteristic root errors, and transfer-function gain and phase errors. We will also consider the extraneous roots introduced by these methods, as well as their overall stability region in the λh plane.

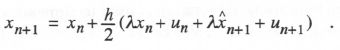

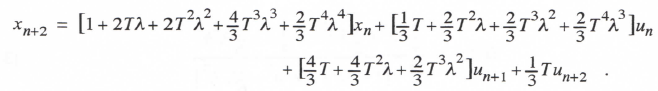

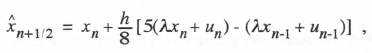

First we consider the second-order predictor-corrector method, AM-2. In Eqs. (1.19) and (1.20) the general form of the equations is presented. When we apply these equations to the first-order linear system given by Eq. (3.1), the following single difference equation is obtained:

(3.133)

Taking the Z transform and solving for H*(z) = X*(z)/U*(z), we have

(3.134)

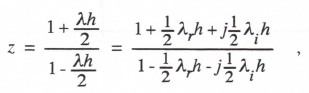

The two poles of H*(z) (i.e., the two roots of the denominator), z1 and z2, determine the equivalent characteristic roots. One of the two roots corresponds to the ideal characteristic root, λ, in accordance with the formula z1 = eλ*h. Here λ*, as before, represents the equivalent characteristic root of the digital system. Since the denominator of H*(z) in Eq. (3.134) is a quadratic in z, we can solve analytically for the two poles, z1 and z2, and thus determine the exact value of λ* as well as the exact value of the equivalent extraneous root. As noted earlier in Section 3.4, this extraneous root results from the dependence of xn+1 in the predictor formula on xn-1 as well as xn. A simple approximate analytic formula for the principal root λ* is obtained by replacing z with eλ*h in the denominator of H*(z), expanding eλ*h in a power series with terms to order h3 retained, and solving for λ*. When this is done, the formula for λ* and hence the fractional root error, eλ, agrees exactly with the result for 2nd-order implicit (i.e., trapezoidal) integration as given by Eq. (3.45) with eI = -1/12. Thus

(3.135)

Since eλ = -(5/12)λ2h2 for AB-2 integration, it follows that the characteristic root error for AB-2 is 5 times the error for AM-2 integration. On the other hand, the AM-2 algorithm requires two passes through the state equations per integration step. This means that AM-2 will in general take about twice as long to execute each integration step compared with AB-2. This results in double the step size for a given overall computation time and hence four times the root error, since both methods are second order. The net effect is to make the AB-2 root error 5/4 or 1.25 times the AM-2 error. On that basis we conclude that AM-2 enjoys a slight edge over AB-2 as a second-order algorithm. But AM-2 does have the disadvantage in real-time simulation of requiring the input un+1 for the second pass one-half frame before it is available in real time. As we shall see in the next section, this difficulty is removed in the real-time AM-2 predictor-corrector method.

3-35

As explained in the introductory paragraph of Section 3.3, the reason that the asymptotic error formulas developed for all single-pass integration algorithms, including implicit methods, apply equally well to the two-pass AM methods is that the local truncation error associated with the predictor pass is of order k +1 and therefore does not contribute to the global error of order k for the overall algorithm. In other words, to order hk it makes no difference whether the exact xn+1 or the estimate n+1 is used for the corrector formula in the second AM-2 pass. Thus the asymptotic formulas for characteristic root and transfer function errors when using AM-2 integration are identical with those noted earlier in Section 3.5 for the implicit methods. The errors are given by the single-pass formulas of Section 3.3 with eI = -1/12, -1/24 and -19/720, respectively, for AM-2,3 and 4 integration.

It follows, therefore, that the approximate asymptotic formulas (valid when ωh << 1) for transfer function gain and phase errors when using AM-2 integration to simulate Eq. (3.1) are given by Eq. (3.48) with eI = -1/12 and k = 2. Of course, the exact transfer function formula is still given by Eq. (3.134) with z replaced by ejωh. Note that H*(z) in Eq. (3.134) for AM-2 is not the same as H*(z) for trapezoidal in Eq. (3.90), even though the asymptotic formulas for transfer function gain and phase errors are the same.

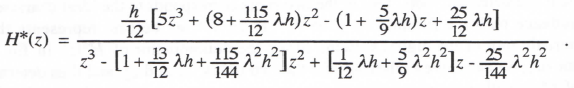

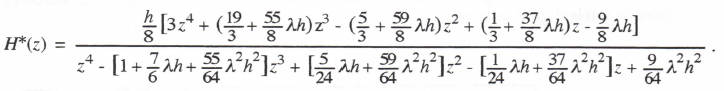

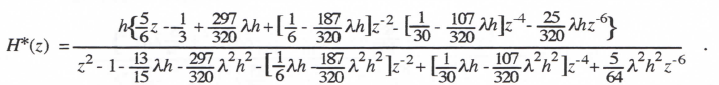

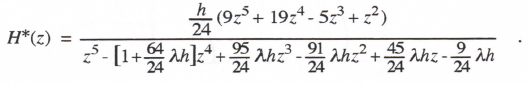

We can determine H*(z) for AM-3 when used to simulate the first-order system represented by H(s) = 1/(s – λ) by applying Eqs. (1.21) and (1.22) to Eq. (3.1), rewriting the result as a single difference equation, and taking the Z transform. When this is done, the following formula is obtained:

(3.136)

For a given λh the three poles of H*(z) can be used to calculate the equivalent characteristic roots for the digital system. One of these roots, λ*, corresponds to the ideal root, λ. The other two roots are extraneous. As noted above, the asymptotic formula for eλ, the fractional root error, is identical to the value for 3rd-order implicit integration and is therefore given by Eq. (3.45) with eI = -1/24 and k = 3. Thus for AM-3

(3.137)

Since eλ = – (3/8)λ3h3 for AB-3 integration, it follows that the characteristic root error for AB-3 is 9 times the error for AM-3 integration. However, we again note that the AM-3 algorithm requires two passes through the state equations per integration step. This means that AM-3 will in general take approximately twice as long to execute each integration step compared with AB-2. This results in double the step size for a given overall computation time and hence eight times the root error, since both methods are third order. The net effect is to make the AB-3 root error 9/8 or 1.125 times that of AM-3. On that basis we conclude that AM-3 has a slight edge over AB-3 as a third-order algorithm, except for its incompatibility with real-time inputs. Again, in the next section we will consider a real-time version of the AM-3 predictor-corrector method.

The approximate asymptotic formulas for transfer function gain and phase errors when using AM-3 integration to simulate Eq. (3.1) are given by Eq. (3.47) with eI = – 1/24 and k = 3.

3-36

The exact transfer function formula is given by Eq. (3.136) with z replaced by ejωh. Again we note that H*(z) in Eq. (3.136) for AM-3 is not the same as H*(z) for third-order implicit integration, even though the asymptotic formulas for transfer function gain and phase errors are the same.

Finally, we consider AM-4 simulation of the first-order system with H(s) = 1/(s – λ). By applying Eqs. (1.23) and (1.24) to Eq. (3.1), rewriting the result as a single difference equation, and taking the Z transform, we obtain the following formula for H*(z):

(3.138)

For a given λh the four poles of H*(z) can be used to calculate the equivalent characteristic roots for the digital system. One of these roots, λ*, corresponds to the ideal root, λ. The other three roots are extraneous. Again, the asymptotic formula for eλ, the fractional root error, is identical to the value for 4rd-order implicit integration and is therefore given by Eq. (3.45) with eI = -19/720 and k = 4. Thus for AM-4

(3.139)